Overview

The eyeCode experiment was designed to investigate which factors affect the comprehensibility of code. We asked 162 programmers to predict the output of 10 small Python programs, each of which had 2 or 3 different versions. Of these participants, 29 completed the experiment in front of a Tobii TX300 eye-tracker; the rest were recruited from Amazon's Mechanical Turk.

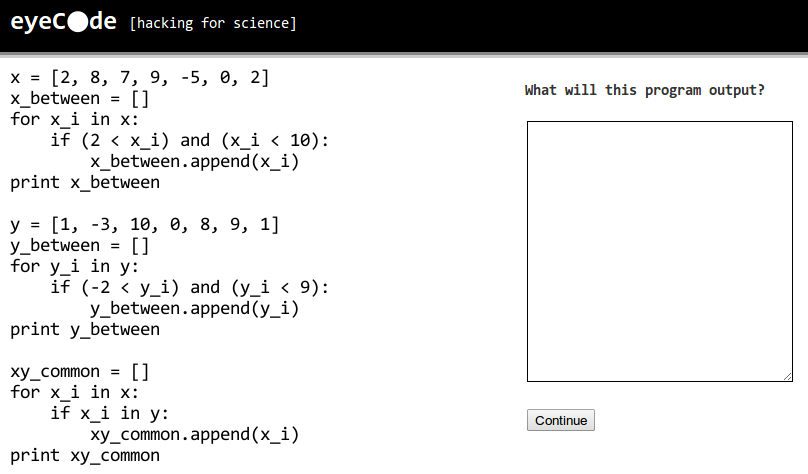

Here's a screenshot of the main screen that participants used to drill down into each trial:

Each trial consisted of the program's code, displayed without syntax highlighting, and a text box where the participant wrote what they thought the program would output.

The presentation order and names of the programs were randomized, and all answers were final (i.e., participants could not go back and revise them). Although every program ran without errors, participants were not told this beforehand. A post-test survey gauged each participant's confidence in their answers and the perceived difficulty of the task.
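The per-participant randomization can be illustrated with a small schedule builder. This is a minimal sketch, not the experiment's actual code: the function `build_schedule`, its `name_pool` parameter, and the choice of one uniformly random version per program are all assumptions, since the description above does not say how versions or display names were assigned. A seed is accepted so that a given participant's schedule can be reproduced.

```python
import random


def build_schedule(versions_by_program, name_pool, seed=None):
    """Return a list of (display_name, program, version) trials for one participant.

    Hypothetical sketch: picks one version per program uniformly at random
    (an assumption), shuffles the presentation order, and pairs each trial
    with a display name drawn without replacement from name_pool.
    """
    rng = random.Random(seed)
    if len(name_pool) < len(versions_by_program):
        raise ValueError("need at least one display name per program")

    # One randomly chosen version per program (assumed, not stated in the source).
    trials = [(prog, rng.choice(versions)) for prog, versions in versions_by_program.items()]

    # Randomize the order in which programs are presented.
    rng.shuffle(trials)

    # Randomize program names: each trial gets a distinct name from the pool.
    names = rng.sample(list(name_pool), len(trials))

    return [(name, prog, ver) for name, (prog, ver) in zip(names, trials)]


if __name__ == "__main__":
    # Placeholder program and version labels, not the real eyeCode materials.
    versions = {f"p{i}": ["a", "b"] if i % 2 else ["a", "b", "c"] for i in range(10)}
    pool = [f"name{i}" for i in range(20)]
    for trial in build_schedule(versions, pool, seed=42):
        print(trial)
```

Drawing names without replacement keeps every displayed name distinct, so a participant never sees two programs with the same label.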